When you talk about conversion rate optimization, it’s hard not to mention A/B testing. CRO and A/B testing are sometimes thought to be synonymous. This is a misconception, though.

A/B testing is a popular part of optimization, but CRO involves more than just testing. And testing done right is a skill unto itself, with many considerations.

For example, how do you create a hypothesis and which metrics should you track? (You can discover the answers to these questions here and here.)

But what about knowing when to stop a test? How long should a test run?

This is a question that confuses some marketers.

Fortunately, though, you don’t need to be a trailblazer when it comes to A/B testing. There are tons of success stories that you can learn from. And below, you’ll discover a few tips and guidelines about how long you should run your A/B tests to get the most accurate results.

How To Determine Your A/B Test’s Length

Sample Size And Statistical Significance Are Key

The length of your A/B test depends on two factors: sample size and statistical significance. And these factors go hand in hand.

If your sample size is too small, your margin of error increases, making your results less likely to be statistically significant and reliable. In other words, you don’t have sufficient evidence to conclude that your test results reflect actual differences between your baseline and variation rather than chance.

So if you want to achieve statistically significant results that you can feel confident about, you need to have a healthy sample size.

What constitutes a healthy sample size can vary from site to site. But, in general, you want to aim to have at least 1,000 visitors in order to draw strong conclusions from your results.

(Don’t forget that when you’re A/B testing, you’re splitting your sample in half and showing one variation to each half. So if you have a sample size of 1,000, you’re actually showing each variation to 500 visitors.)

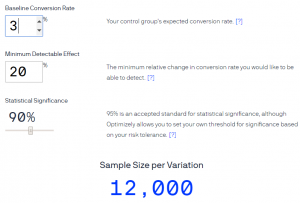

To get a more accurate number for your ideal sample size, Optimizely has a calculator you can use that also factors in statistical significance.
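If you are curious what a calculator like this does under the hood, here is a minimal sketch of one common textbook approach: the sample size formula for comparing two conversion rates. It is not necessarily the method any particular tool uses. The function name, the 80% power default, and the example rates are all assumptions made for illustration.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variation(baseline_rate, min_relative_lift,
                              alpha=0.05, power=0.80):
    """Approximate visitors needed in EACH variation (two-sided test).

    baseline_rate     -- current conversion rate, e.g. 0.05 for 5%
    min_relative_lift -- smallest lift worth detecting, e.g. 0.20 for +20%
    alpha             -- 1 minus the significance level (0.05 means 95%)
    power             -- chance of detecting the lift if it is real (assumed 80%)
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_relative_lift)
    if not 0 < p1 < 1 or not 0 < p2 < 1 or min_relative_lift <= 0:
        raise ValueError("rates must be between 0 and 1 and the lift positive")

    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    p_bar = (p1 + p2) / 2                          # average of the two rates

    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Hypothetical example: 5% baseline conversion rate, hoping to detect a 20% lift.
per_variation = sample_size_per_variation(0.05, 0.20)
print(f"Visitors per variation: {per_variation}")
print(f"Total visitors for an A/B test: {per_variation * 2}")
```

Notice that the smaller the lift you want to detect, the more visitors you need, which is why a low-traffic site testing a subtle change can require far more than the general rule of thumb.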

In addition to making sure your sample is large enough, you also want to make sure it’s representative of your target audience and that you avoid other forms of test pollution.

And when it comes to statistical significance, you should aim for 95% or more. At 95%, you accept about a 1-in-20 chance of declaring a winner when no real difference exists; anything less raises that risk.
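To make the 95% threshold concrete, here is a rough sketch of one standard way to check significance: a two-proportion z-test. Testing tools may use different statistics, so treat this as an illustration only. The function names and the visitor and conversion counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a, visitors_a, conv_b, visitors_b):
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = conv_a / visitors_a
    rate_b = conv_b / visitors_b
    # Pooled rate: the conversion rate if both variations performed the same.
    pooled = (conv_a + conv_b) / (visitors_a + visitors_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if std_error == 0:
        return 1.0  # no conversions (or all conversions): nothing to compare
    z = (rate_b - rate_a) / std_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


def is_significant(conv_a, visitors_a, conv_b, visitors_b, confidence=0.95):
    """True if the difference clears the chosen significance level."""
    p_value = two_proportion_p_value(conv_a, visitors_a, conv_b, visitors_b)
    return p_value < 1 - confidence


# Hypothetical numbers: the same 10% vs. 12% conversion rates at two sample sizes.
print(is_significant(50, 500, 60, 500))      # 500 visitors per variation
print(is_significant(500, 5000, 600, 5000))  # 5,000 visitors per variation
```

With these made-up numbers, the identical two-point difference in conversion rate does not reach 95% significance at 500 visitors per variation, but it does at 5,000. That is the link between sample size and significance in practice.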

Don’t End Your Tests Too Soon

This happens a lot. Marketers think they know the outcome of a test, so they end it prematurely. But if you actually knew the answer, why would you bother running the test in the first place? Plus, if you approach a test with too many assumptions, you may inadvertently skew the results.

To get the most accurate outcome, you need to keep your assumptions in check and let the tests run their full course, which shouldn’t be less than a full week. But really, two weeks or longer is better. And, again, you need to make sure you’ve reached the gold standard of statistical significance at 95-99%.

The reason to let tests run for two weeks or longer is that your site’s traffic and conversions can vary significantly from day to day and week to week. Tests don’t need to run for months and months, but having too much data is pretty much always better than having too little.
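As a back-of-the-envelope sketch, you can turn a required sample size and your typical daily traffic into a planned test length. The function name and the traffic figures below are hypothetical. Rounding up to whole weeks is an assumption added here so that every day of the week is sampled equally, and the 14-day floor reflects the two-week guideline above.

```python
from math import ceil


def estimate_duration_days(visitors_per_variation, daily_visitors,
                           variations=2, min_days=14):
    """Estimate how many days a test should run.

    visitors_per_variation -- required sample size for each variation
    daily_visitors         -- average daily visitors entering the test
    variations             -- number of versions shown (2 for a simple A/B test)
    min_days               -- floor on test length (two weeks by default)
    """
    if daily_visitors <= 0:
        raise ValueError("daily_visitors must be positive")
    total_needed = visitors_per_variation * variations
    days_for_sample = ceil(total_needed / daily_visitors)
    days = max(days_for_sample, min_days)
    # Assumption: round up to full weeks so each weekday is represented equally.
    return ceil(days / 7) * 7


# Hypothetical example: 8,000 visitors needed per variation, 500 visitors a day.
print(estimate_duration_days(8000, 500))
```

Decide on the length before the test starts and stick to it, rather than stopping the moment the numbers look good.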

And if you don’t have a clear winner after a couple of months, you may need to scrap that test and start over with a new variation.

When it comes to the length of your A/B tests, patience is a virtue, and large sample sizes and statistical significance are essential.

Hopefully, you found this post useful! How do you decide how long to run your A/B tests for? Get in touch via social media and share your insights!